Khin-Htun, S.¹* & Than, H.²

¹Medical Education Fellow and Honorary Assistant Professor, University of Nottingham, UK

²Trust Grade Doctor, Queen’s Medical Centre, Nottingham University Hospitals, Nottingham, UK

*Correspondence to: Dr. Swe Khin-Htun, Medical Education Fellow and Honorary Assistant Professor, University of Nottingham, UK.

Copyright © 2019 Dr. Swe Khin-Htun, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

The development of clinical reasoning (CR) skills in medical students can be influenced by many factors. One attribute suggested to play an important role in CR skills is gender.

To gain further insight into this theory, the marks for CR questions in the summative knowledge exam papers of third-year (Clinical Phase 1, CP1) and final-year (Clinical Phase 3, CP3) medical students at the University of Nottingham (UoN) were reviewed.

Data on how well male and female students performed on CR questions were collected and analyzed for three cohorts in each phase: 2012, 2013 and 2014 for CP1, and 2014, 2015 and 2016 for CP3.

In summary, CR scores in the summative written exams showed no significant difference between genders in either the CP1 or the CP3 dataset across the three cohorts.

In conclusion, gender was found to have no significant impact on the development of CR in medical students, as measured by summative written exams.

Abbreviations

CR: Clinical reasoning

CP1: Clinical phase 1, the first clinical phase

CP3: Clinical phase 3, the third and final clinical phase

UoN: The University of Nottingham

Introduction

Clinical reasoning (CR) is defined as a recursive, multidimensional and complex process that involves formal and informal strategies for analysing patient information and evaluating that information [1]. Medical errors resulting from faulty reasoning lead to morbidity and mortality among patients and care users [2]. Undergraduate (UG) students commonly acquire CR skills through experiential learning while undertaking the clinical curriculum. However, CR has not been formally taught in the past.

The development of CR can be influenced by many factors. A study by Karwowski, Gralewski and Szumski (2015) [3] found that CR differs with the gender of students. Male students have been found to have better CR skills than their female counterparts [4]. In a similar study, gender also influenced the rationality of decision-making, with male students being more rational decision-makers than female students [5]. Although female students outperform male students in some circumstances, male students have a better understanding of the perspectives of CR. Female students show more interest in learning the ideas, concepts, theories and content of the clinical curriculum, whereas male students are observed to be better able to apply these concepts in real professional life [3]. Baron-Cohen et al. (2015) [4] argued that perception of the nursing curriculum, its content, practices, theories and concepts differs with the gender of students, with male students sometimes showing more aptitude for developing CR than female students. Moreover, Siani and Assaraf (2016) [6] claimed that the psychological characteristics of male and female students differ with respect to the learning and development of CR skills. These findings are supported by the study of Doane, Kelley and Pearson (2016) [7].

Groves, O’Rourke and Alexander (2003) [8] used Clinical Reasoning Problems (CRP) and the Diagnostic Thinking Inventory (DTI) to assess the effect of gender on the CR ability of medical students. Female students performed better on the CRP but not on the DTI, although female gender was a positive predictor of CR ability. This is likely due to a more careful and thorough diagnostic approach, which results in deliberate identification of all critical features of the case presentation [8]. Female nursing students, like female medical students, have also shown better ability in resolving problems and managing critical situations [9].

On the other hand, other studies in the medical and dental literature have shown that gender has no influence on the CR ability of UG students [10-15].

Many studies compare CR between male and female students using experimental and research assessment methods. The distinctive aspect of our study is its use of a multipurpose standardized assessment tool, in this case summative written exam papers. Such tools enable assessment across a predetermined set of problems and can sample a broad range of content domains within limited testing time.

Materials and Methods

The research question is: “Does gender influence outcomes as measured by summative written exams?”

CR can be assessed in different ways, such as experimental and research methods, standardized assessments and workplace-based assessments. Standardized assessment tools are divided into multipurpose assessment tools and tools designed specifically to assess CR. Multipurpose assessment tools include standardized examinations such as written papers or objective structured clinical examinations (OSCEs). They enable assessment across a predetermined set of problems, but they take place in an artificial environment and do not assess all aspects of CR.

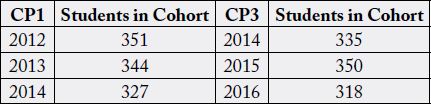

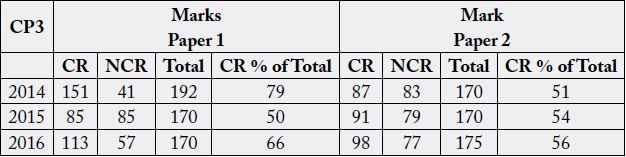

In this study, standardized written knowledge exam papers were used to compare the effect of gender. Clinical Phase 1 (CP1) and Clinical Phase 3 (CP3) students sit summative knowledge papers at the end of their clinical phase: CP1 has one knowledge paper and CP3 has two. Performance on the portions of these papers consisting predominantly of CR questions was compared between male and female CP1 and CP3 students to see the effect of gender on the development of CR skills. There are three cohorts at each stage: CP1 (2012, 2013 and 2014) and CP3 (2014, 2015 and 2016). The study started in 2011 and ended in 2016, and the average number of students per cohort was between 318 and 351 [Table 1].

First, the summative knowledge papers are reviewed and each question is categorised as CR or non-CR during standard-setting meetings. These meetings are conducted by 15-25 experts, including specialists in gastroenterology, respiratory medicine and general surgery, as well as GPs, the Director of Clinical Skills, clinical teaching fellows, module leads, medical educators and some junior doctors. We invited all doctors and educators from the different trusts to which University students are clinically attached, and the meetings were led by the Director of Assessment. The number of raters varied from 25 to 35, and their specialties and grades (from F1 to clinical lead) varied on each occasion. Attendance at most meetings is mandatory for very experienced clinical leads, course directors and education fellows (who have an explicit education role in their trust); to ensure variety, the meetings are also open to other clinicians. If there is disagreement during a meeting about whether a question is CR or non-CR, the team takes Bloom’s taxonomy of learning domains into account and also maps the question against statements 8c, 8g and 14f of ‘Outcomes for Graduates’ in Tomorrow’s Doctors, published by the GMC, to categorise it. The question is then discussed until agreement is reached. Only questions that assess the third category (apply) to the sixth category (evaluate) of Bloom’s cognitive processes are accepted as CR questions.
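The acceptance rule above can be written as a simple filter over the Bloom's category agreed for each question. This is a minimal sketch only: the real categorisation relied on discussion and GMC mapping, not a lookup. The level names follow the original Bloom's taxonomy, in which evaluation is the sixth level (matching the text); the function name `is_cr_question` and the sample questions are hypothetical.

```python
# Original Bloom's cognitive levels (assumed numbering; evaluation = 6).
BLOOM_LEVELS = {
    "knowledge": 1,
    "comprehension": 2,
    "application": 3,
    "analysis": 4,
    "synthesis": 5,
    "evaluation": 6,
}


def is_cr_question(bloom_category: str) -> bool:
    """Hypothetical rule: a question counts as CR if its agreed level is 3-6."""
    level = BLOOM_LEVELS[bloom_category.lower()]
    return 3 <= level <= 6


# Hypothetical categories agreed for four questions at a standard-setting meeting.
agreed = {"Q1": "knowledge", "Q2": "application", "Q3": "analysis", "Q4": "comprehension"}
cr_items = [q for q, cat in agreed.items() if is_cr_question(cat)]
print(cr_items)
```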

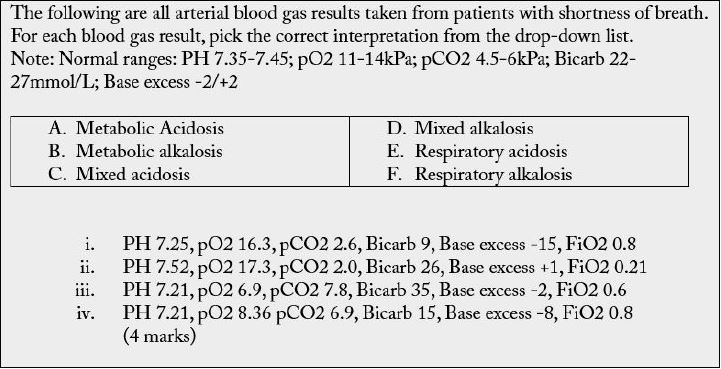

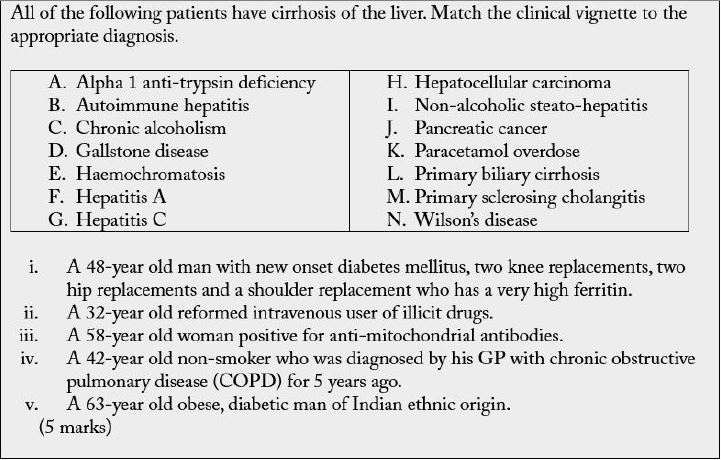

For example, the first question (Table 2) can be answered using knowledge alone, whereas the second set of questions (Table 3) requires students to mobilise their knowledge and apply it in a clinical context. Only questions of the latter type were treated as CR questions and used for comparison in this study.

In the final dataset, CR questions covered: being given a history and asked to formulate a diagnosis for each case; being given physical findings and asked to choose the most likely diagnosis; being given investigation results and asked to find a diagnosis and provide a treatment plan; being given a diagnosis and asked to choose the matching case vignette or history; and being given a history and asked to match investigation findings to their interpretation.

For each exam paper, routine psychometric analysis is carried out to ensure a high-quality assessment tool. Classical Test Theory (CTT) and Item Response Theory (IRT) are used to analyse the post-examination psychometric data. Problematic test items that are either too difficult or too easy are identified using student-item maps. Test-score reliability (Cronbach’s alpha), the item discrimination index (ID) and the standard error of measurement (SEM) are also used to analyse the knowledge papers.
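Cronbach's alpha and the SEM mentioned above are linked: alpha is computed from the item variances and the total-score variance, and SEM = SD(total) × sqrt(1 - alpha). The sketch below assumes a students × items matrix of 0/1 item scores; the function names and toy data are illustrative and are not the University's analysis software.

```python
import math
import statistics


def cronbach_alpha(scores: list[list[int]]) -> float:
    """Cronbach's alpha for a students x items matrix of item scores."""
    k = len(scores[0])  # number of items
    item_vars = [statistics.variance([row[j] for row in scores]) for j in range(k)]
    total_var = statistics.variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def sem(scores: list[list[int]], alpha: float) -> float:
    """Standard error of measurement: SD of total scores x sqrt(1 - alpha)."""
    sd_total = statistics.stdev([sum(row) for row in scores])
    return sd_total * math.sqrt(1 - alpha)


# Toy data: 5 students x 4 items (1 = correct, 0 = incorrect).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.2f}, SEM = {sem(toy, alpha):.2f}")
```

A higher alpha shrinks the SEM, which is why the two are usually reported together.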

In addition, each paper is inspected using frequency analysis, upper-lower (U-L) discrimination analysis and learning-objective analysis. Item difficulty (p) and discrimination (d) are calculated for each item, and items with both low discrimination (d < 0.15) and high difficulty (p < 0.2) are excluded. Generalizability (G) theory (student × item design) is used to measure the reliability of the test. Furthermore, descriptive statistics (sample characteristics by gender and course) and item analysis (item discrimination, generalizability and decision studies) are used to check each item. Whether there is any correlation between cases and mean marks is also considered.
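The difficulty and discrimination indices and the exclusion rule above can be sketched as follows. Here p is the proportion of students answering an item correctly and d is the difference in proportion correct between the upper and lower scoring groups. The 27% group size is a common convention assumed for illustration, not something stated in this paper, and the function names are hypothetical.

```python
def item_difficulty(scores: list[list[int]], item: int) -> float:
    """Difficulty index p: proportion of students answering the item correctly."""
    return sum(row[item] for row in scores) / len(scores)


def item_discrimination(scores: list[list[int]], item: int, frac: float = 0.27) -> float:
    """Upper-lower discrimination d = p(upper group) - p(lower group).

    Groups are the top and bottom `frac` of students ranked by total score
    (27% is an assumed convention, not taken from the source).
    """
    ranked = sorted(scores, key=sum, reverse=True)
    g = max(1, round(len(scores) * frac))
    upper, lower = ranked[:g], ranked[-g:]
    return item_difficulty(upper, item) - item_difficulty(lower, item)


def should_exclude(p: float, d: float) -> bool:
    """Exclusion rule as stated: low discrimination AND high difficulty."""
    return d < 0.15 and p < 0.2


# Toy data: 5 students x 4 items (1 = correct, 0 = incorrect).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
for item in range(4):
    p, d = item_difficulty(toy, item), item_discrimination(toy, item)
    print(f"item {item + 1}: p = {p:.2f}, d = {d:.2f}, exclude = {should_exclude(p, d)}")
```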

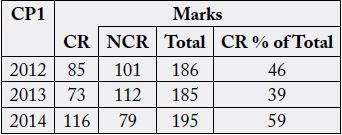

External and internal examiners review each paper carefully. Any comments on individual questions are considered carefully to decide whether further action is needed, and the final scores are released only after these steps. Tables 4 and 5 provide the overall results for CP1 and CP3.

These exam papers were not designed specifically for this research; the researchers used the opportunity to review them, identify CR questions and compare performance by gender. As a result, there are many datasets, with different numbers of students, different summative knowledge exam papers and different components of CR marks in each cohort. Given the nature of these data, we used an independent-samples t-test to compare group means rather than a paired analysis of score differences. The total raw scores reflect the weight of the correct answers.

A significance level of 0.05 was used for the independent-samples t-test.
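For reference, a minimal sketch of Student's independent-samples t-test with pooled variance, whose degrees of freedom are n1 + n2 - 2. With the pooled CP1 sample reported in the Results (577 female and 441 male students) this gives 1016 degrees of freedom, consistent with the reported t(1016); the pooled-variance (equal-variance) form is inferred from those degrees of freedom rather than stated by the authors. The function name and example scores are hypothetical.

```python
import math
import statistics


def pooled_t_test(a: list[float], b: list[float]) -> tuple[float, int]:
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df), where df = len(a) + len(b) - 2.
    """
    na, nb = len(a), len(b)
    # Pooled estimate of the common variance, weighting each group by its df.
    sp2 = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    # Standard error of the difference between the two group means.
    se = math.sqrt(sp2 * (1 / na + 1 / nb))
    t = (statistics.mean(a) - statistics.mean(b)) / se
    return t, na + nb - 2


# Hypothetical CR scores for two small groups, for illustration only.
female = [62.0, 58.5, 71.0, 66.0, 60.5]
male = [59.0, 63.5, 57.0, 61.0]
t, df = pooled_t_test(female, male)
print(f"t({df}) = {t:.2f}")
```

In practice a statistics package computes the p-value as well; for example, SciPy's `scipy.stats.ttest_ind` with `equal_var=True` performs this pooled test. The result is then judged against the 0.05 significance level.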

The ethics committee confirmed that departmental approval was not required, as this kind of project is classified as a service evaluation.

Results

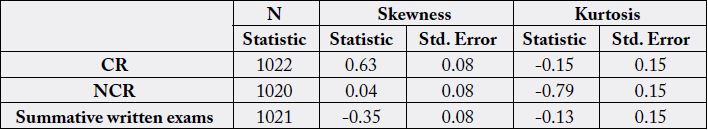

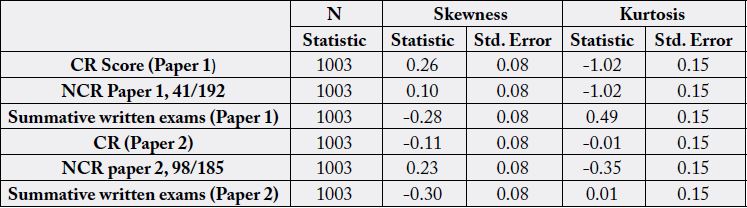

An independent sample t-test was conducted to address the research objectives. Therefore, normality testing

was conducted by investigation of the skewness and kurtosis statistics and histogram to check the distribution

of data of the different dependent variable. To determine whether the data follows normal distribution,

skewness statistics greater than three indicate strong non-normality and kurtosis statistics between 10 and

20 also indicate non-normality [16]. As can be seen in Table 4 and 5, the range of values of the skewness

(-0.35 to 0.63) and kurtosis (-0.79 to -0.13) statistic for the dependent variables of CR scores, NCR scores,

and total scores of summative written exam for the CP1 dataset; and also the range of values of the skewness

(-0.30 to 0.26) and kurtosis (-1.02 to 0.49) statistic for the dependent variables of CR scores, NCR scores,

and total scores of summative written exam for papers 1 and 2 for the CP3 dataset were in the acceptable

range which showed that all the data of these dependent variables exhibited normal distribution. Thus, the

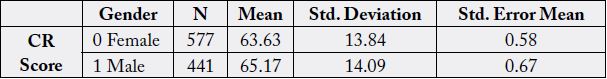

parametric statistical analyses can be conducted [Table 6,7]. According to the nature of our study, we are

not comparing each year but we put all CP1 from 3 cohorts and all CP3 from 3 cohorts together and that

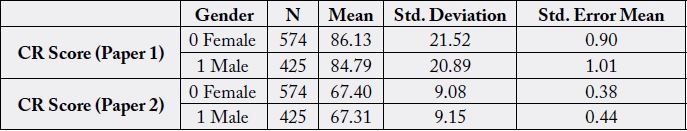

accounts 577 female students and 441 male in CP1 [Table 8]. For CP3, 574 female and 425 male in total

are involved in this study [Table 9].
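The skewness and kurtosis screen described above can be sketched as follows. This assumes moment-based skewness and excess kurtosis (packages such as SPSS report small-sample adjusted versions, so values differ slightly) and reads the criteria of [16] as |skewness| > 3 or kurtosis of 10 or more flagging non-normality. The function names are hypothetical. Because each criterion applies to one statistic independently, checking the worst reported skewness and kurtosis values is enough to confirm that every reported value passes.

```python
import statistics


def skewness_kurtosis(x: list[float]) -> tuple[float, float]:
    """Moment-based skewness g1 and excess kurtosis g2 (both 0 for a normal)."""
    n = len(x)
    m = statistics.fmean(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def looks_normal(skew: float, kurt: float) -> bool:
    """Assumed reading of [16]: |skew| > 3 or kurtosis >= 10 flags non-normality."""
    return abs(skew) <= 3 and kurt < 10


# Ranges reported for the CP1 and CP3 datasets (Tables 4 and 5).
cp1_skew, cp1_kurt = (-0.35, 0.63), (-0.79, -0.13)
cp3_skew, cp3_kurt = (-0.30, 0.26), (-1.02, 0.49)
worst_skew = max(abs(v) for v in cp1_skew + cp3_skew)  # 0.63
worst_kurt = max(cp1_kurt + cp3_kurt)  # 0.49
print(looks_normal(worst_skew, worst_kurt))
```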

To prevent data overload, only CR questions were compared; NCR questions were outside the scope of this study.

An independent-samples t-test was conducted to determine whether gender influences outcomes as measured by the CR score in the summative written exam. Separate analyses were conducted on the CP1 and CP3 datasets.

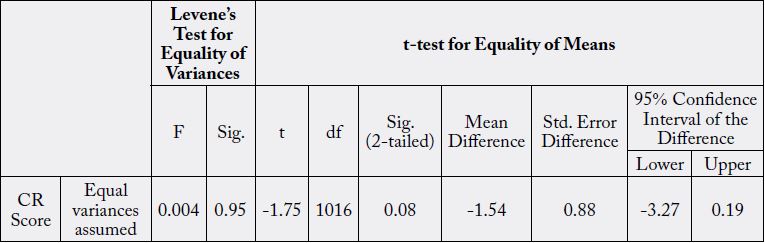

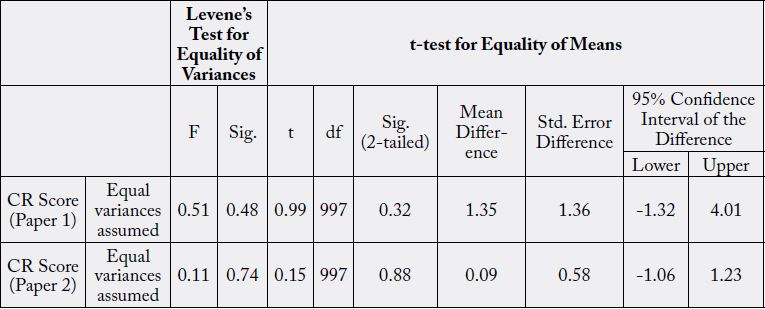

Table 10 summarizes the results of the independent-samples t-test comparing CR scores in the summative written exam by gender in the CP1 dataset. CR scores did not differ significantly between male and female students (t(1016) = -1.75, p = 0.08). Table 11 summarizes the corresponding results for the CP3 dataset: CR scores did not differ significantly between male and female students in either paper 1 (t(997) = 0.99, p = 0.32) or paper 2 (t(997) = 0.15, p = 0.88). The null hypothesis, that gender has no significant effect on outcomes as measured by summative written exams, was therefore not rejected.
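As an arithmetic cross-check of the reported statistics: with about 1000 degrees of freedom the t distribution is very close to the standard normal, so the two-sided p-value can be approximated as erfc(|t| / sqrt(2)). The sketch below uses only the t and df values reported above. It confirms that df equals n1 + n2 - 2 for the pooled cohorts and reproduces the reported p-values to two decimal places. The normal approximation is our simplification, not the authors' method.

```python
import math


def two_sided_p_normal(t: float) -> float:
    """Two-sided p-value for t using the standard normal approximation.

    Only reasonable for large degrees of freedom (here about 1000).
    """
    return math.erfc(abs(t) / math.sqrt(2))


# Degrees of freedom for a pooled-variance t-test: n_female + n_male - 2.
df_cp1 = 577 + 441 - 2
df_cp3 = 574 + 425 - 2

# (t, df, reported p) as quoted from Tables 10 and 11.
reported = {
    "CP1 CR score": (-1.75, df_cp1, 0.08),
    "CP3 paper 1 CR score": (0.99, df_cp3, 0.32),
    "CP3 paper 2 CR score": (0.15, df_cp3, 0.88),
}

for label, (t, df, p_reported) in reported.items():
    p = two_sided_p_normal(t)
    print(f"{label}: t({df}) = {t}, approx p = {p:.3f}, reported p = {p_reported}")
```

All three approximate p-values exceed the 0.05 significance level, consistent with the conclusion that the null hypothesis was not rejected.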

Discussion

The research question asked “Does gender influence outcomes as measured by summative written exams?”

The results of the data analysis revealed no significant difference between male and female students in outcomes as measured by summative written exams.

Previous studies have reported gender differences in CR, with men exhibiting stronger CR. For instance, Karwowski et al. (2015) [3] found that the development of CR differs with the gender of students. Male students have been found to have better CR skills than their female counterparts [4], and in a similar study gender also influenced the rationality of decision-making, with male students being more rational decision-makers than female students [5]. The current study, which revealed no gender differences in the CR of men and women, does not support these findings.

A notable result of this study was the lack of gender differences in CR between male and female students. This suggests that curricular modifications to account for possible gender differences in the development of CR may not be necessary. This finding contrasts with previous studies suggesting that CR tends to differ between men and women [3,4].

We recommend formulating an assessment plan or rubric that captures the conceptual components of CR. An assessment rubric for CR could support the redesign of courses to target specific weaknesses identified from student outcomes. Assessment of CR should draw on many sources, because CR consists of multiple traits, such as knowledge, skills and problem solving, each of which is best assessed by a specific tool [17-20].

Limitations

There is no gold standard for the assessment of CR. This study used summative knowledge papers purely as a proxy measure, so not all aspects of CR could be assessed. In addition, the datasets differ in the number of students, the summative knowledge exam papers used and the components of CR marks in each cohort. Because of the nature of these data, we compared group means using an independent-samples t-test instead of a paired analysis of score differences.

Conclusions

In conclusion, both genders have the potential to develop CR skills. Some studies provide evidence of more effective CR development among male students, whereas others suggest that female students have more potential than male students. Meanwhile, some studies show no effect of gender on the development of CR. These conflicting findings call for more research to understand the extent to which gender influences the development of CR in the learning environment and in decision making in real-world practice.

Bibliography
