Lindsay, G.1,2*, Quincey, L.2, Amal, Y.2 & Cayetano, S.2

1School of Nursing, Umm Al Qura University, Makkah, Saudi Arabia

2Al Hada Armed Forces Hospital, Taif Region, Saudi Arabia

*Correspondence to: Dr. Lindsay, G., School of Nursing, Umm Al Qura University, Makkah, Saudi Arabia.

Copyright © 2018 Dr. Lindsay, G., et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Artificial Intelligence (AI) based applications are growing rapidly in a variety of healthcare delivery systems and processes. Healthcare practitioners and those requiring health care are likely to be directly impacted by these developments in the near future. Making the new technology more difficult to understand is the new vocabulary that is evolving in this area of science and technology.

The objectives of this topic review are to provide an understanding of what is meant by the entity ‘artificial intelligence’ and how it is being created and used to enhance healthcare delivery. This includes an overview of the key resources, terminology and assumptions used in the preparation of AI components for use in various healthcare disciplines and settings as well as other applications. The range of inter-connectivity with a variety of data sources and outputs is presented, highlighting the strengths and limitations that AI-powered healthcare devices may offer in the healthcare field.

This review provides an overview of AI in a range of emerging digital applications within and beyond healthcare practice and its interface between healthcare practitioners and those requiring healthcare interventions. Some of the challenges and opportunities for improved patient care from a healthcare standpoint are discussed together with examples of areas where greatest applications are being achieved. These developments have the potential to influence the direction and delivery of healthcare. The goals in introducing AI resourced processes and devices in healthcare are to improve efficiency while maintaining quality healthcare for the population at an affordable cost. Through a clearer understanding of the underpinning technology, its capacity, capability, complexity and concerns, better informed decision-making can facilitate the effective integration of this new technology.

Abbreviations

AI - Artificial Intelligence

IBM - International Business Machines

CT - Computerized (Axial) Tomography

ANN - Artificial Neural Network

RSA - Rivest, Shamir, and Adleman

ML - Machine Learning

DL - Deep Learning

IOT - Internet of Things

IED - Intelligent Electronic Device

NLP - Natural Language Processing

BT - Body Temperature

BP - Blood Pressure

HR - Heart Rate

RR - Respiratory Rate

MRI - Magnetic Resonance Imaging

GPS - Global Positioning System

AHP - Allied Health Professionals

GDP - Gross Domestic Product

FBI - Federal Bureau of Investigation

TSB - Trustee Savings Bank

NHS - National Health Service

IT - Information Technology

GPU - Graphics Processing Unit

Introduction

Artificial Intelligence (AI) is the concept of machines behaving intelligently, by which is meant behaving in a way that a human would regard as ‘smart’ [1]. The capability of AI has been tested in numerous laboratory settings and device-specific performances. One of its most public achievements, in a task universally agreed to be intellectually difficult, was the defeat in 1997 of Garry Kasparov, the World Chess Champion at the time, by IBM’s Deep Blue computer. Google repeated the feat in 2015 in the game of ‘Go’. These triumphs were widely publicized and ‘shone a light’ on the emerging potential of AI. AI is now improving daily life in many domains including healthcare, safety, productivity and the efficiency of a multitude of routine organizational and functional tasks undertaken by humans [2]. Progress in AI appears to be producing systems with increasing degrees of sophistication in “intelligence” and with basic awareness of their surroundings, e.g. autonomous driving applications. In so doing, AI technology is migrating from its perception as a tool or additional functionality in existing devices, e.g. predictive text on smart phones, to that of an autonomous agent, team mate, assistant or delegate [3] performing complex tasks purposefully and efficiently, e.g. identifying patterns in CT scans.

This article explains the basic functional unit differentiating AI from existing technology-supported healthcare applications such as tele-health and other protocol driven applications used in healthcare delivery. New terminology which has emerged to describe the direct and indirect components and processes involved in the creation and usage of AI will be defined. Examples of technologies will be discussed in relation to their application in healthcare including their strengths and limitations. These technological developments are being described as a threat to the role of healthcare practitioners by some commentators but an enhancement driving more effective healthcare practice by others [4]. To contribute to that debate requires a clear understanding of the fundamentals of AI. This article aims to provide such perspectives.

Materials and Methods

Over the past hundred years, technological advances have developed in areas involving ‘machines of all kinds’, for example, machines for dialysis, cardiac monitors, automated recording devices etc. From 1980 onwards the use of computers in healthcare began to emerge in areas such as medical health records, laboratory assays, patient safety monitors, diaries, alerts, wireless charting, fitness monitors with targets and feedback, robotics, tele-health etc. Many of these devices perform administrative tasks such as collating results, scheduling appointments, synchronizing information from different sources, ordering and more.

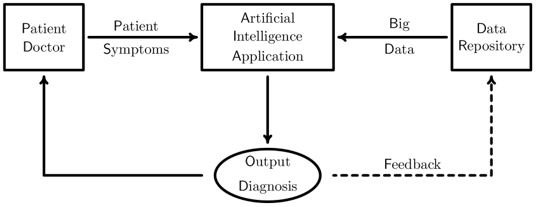

The underpinning operation of clinical decision-making technologies is based on flow decision-making algorithms built around evidence-based practice guidelines (Figure 1). AI-driven applications can potentially improve on these technologies through their ability to assimilate more data with greater complexity and greater automation and accuracy.

Artificial intelligence-based technologies under development today have their capability built on the many

other technological advances of recent time, and in particular wireless communication, micro-processors,

the internet and its access to resources, and most significantly the ‘quantum leap’ to machine learning, deep

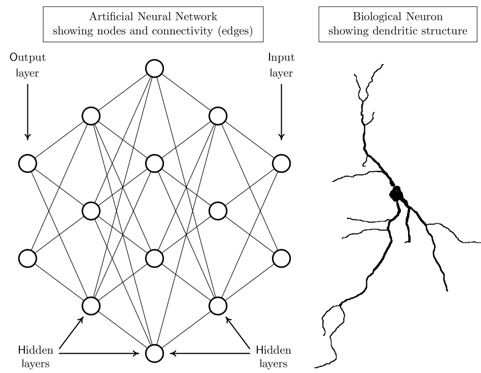

learning and artificial neural networks. The functional unit in AI devices is an artificial neural network

(ANN), the structure and behavior of which tries to emulate the way in which sensory input to a biological

neural network generates an output response, e.g. input signals to our visual system are modified by our

biological neural network to produce guided and controlled movement.

However, an ANN is not a physical entity, unlike its biological counterpart. Instead it is a piece of computer software, often written in a programming language called Python. The level of hardware required to operate an AI application will depend upon its complexity and function, but common applications such as Cortana and Siri operate successfully on Microsoft Windows 10 and Apple iPhone respectively. Just as a biological neural network processes signals through chains of neurons, so an ANN processes signals through hidden layers of nodes. This direction of travel is motivated by the perception that if AI technology emulates the structure and operation of the human brain, then AI systems should in principle be able to simulate human thinking and creativity.

Figure 2 (left panel) illustrates a schematic of an ANN with three hidden layers in which artificial neurons (or nodes) are represented by circles and connections (or edges) between nodes are represented by lines connecting the circles. Figure 2 (right panel) illustrates a biological neuron and its dendritic structure.

The fundamental difference between biological and artificial neurons is that biological neurons have a treelike (dendritic) shape whereas the nodes of an artificial neural network are mathematical points at which incident signals are summed. Each node then generates an output signal which is transmitted to other nodes of the network using a predetermined rule such as a threshold condition, that is, output is generated only when the aggregated strength of the inputs to a node exceeds a given predetermined value. By contrast, a biological neuron receives inputs from other neurons over its entire dendritic structure. The shape of the neuron causes these inputs to be combined in a sophisticated way to generate output. To compensate for this lack of geometrical complexity, ANNs need several hidden layers to simulate the behavior of a single biological neuron.
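The threshold rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in any particular AI device: the function names `threshold_node` and `layer`, the weights and the threshold value are all assumptions chosen for the example.

```python
def threshold_node(inputs, weights, threshold):
    """Sum the weighted inputs and fire (return 1) only above the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def layer(inputs, weight_rows, threshold):
    """Apply one threshold node per row of weights to the same inputs."""
    return [threshold_node(inputs, row, threshold) for row in weight_rows]


# Two input signals feeding a layer of two nodes (illustrative values only).
signals = [1, 1]
hidden = layer(signals, [[0.6, 0.6], [0.2, 0.2]], threshold=1.0)
print(hidden)  # first node fires (1.2 > 1.0), second does not (0.4 <= 1.0)
```

Stacking several such layers, with the output of one layer used as the input to the next, gives the hidden-layer structure shown schematically in Figure 2.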

Biological learning is thought to be embodied in the relative strength of interactions between neurons. The biological process of synaptic plasticity [5] causes repetition of a task, i.e. practice, to strengthen some interactions between neurons of the network and weaken others. Artificial neural networks emulate this process by allowing input signals to change the strengths (or weights) of interactions between their nodes. The machine equivalent of repeating a task is granting an ANN access to more and more input. This paradigm is called Machine Learning (ML). In principle, the quality of ML can be controlled by either allowing or preventing the refinement of weights in response to input.
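As a hedged illustration of how repeated input can refine weights, the sketch below trains a single threshold node with the classic perceptron update rule. The perceptron rule is used here only as a simple stand-in for the training methods of real ANNs; the names `predict`, `train` and `allow_update`, and the toy data set, are assumptions made for the example.

```python
def predict(weights, bias, inputs):
    """Threshold node: fire when bias plus weighted inputs is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, weights, bias, rate=1, epochs=20, allow_update=True):
    """Repeatedly present labeled samples and nudge the weights after errors.

    Setting allow_update=False freezes the weights, i.e. learning is prevented.
    """
    weights = list(weights)
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if allow_update and error != 0:
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                bias += rate * error
    return weights, bias


# Toy task (assumed for illustration): output 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

learned_weights, learned_bias = train(AND_SAMPLES, [0, 0], 0)
frozen_weights, frozen_bias = train(AND_SAMPLES, [0, 0], 0, allow_update=False)
```

With updates allowed, the node learns to reproduce every label in the toy data set; with updates prevented, the weights stay at their initial values and the node never fires.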

In this context we mention “Cloud Computing”. The Cloud comprises a network of remote servers accessible over the internet. Registered users of the Cloud pay to store and access data, and to operate programs hosted on the Cloud and supplied with data via the internet instead of using local resources. The Cloud is particularly suitable for learning and training AI devices because it can seamlessly interface powerful computing resources with sources of ‘Big Data’ which is a prerequisite for effective machine learning. It is anticipated that the next big leap in AI will come with the practical availability of Quantum Computing which promises such massive increases in processing power that current security protocols based on RSA encryption will no longer offer security [8].

Deep Learning is a subset of ML and refers to the ability of an ANN to identify and learn patterns and data representations as opposed to learning specific tasks. An important property of a DL neural network is that its performance improves as it is granted access to more and more input. AI based on DL makes decisions based on pattern recognition and data representation [9]. For example, part of the classification in an AI system to control a vehicle will distinguish persons from road signs from traffic lights from lampposts among other things. Importantly, some AI applications based on DL have improved to the point that they can often outperform humans in tasks involving the identification or classification of objects in images.

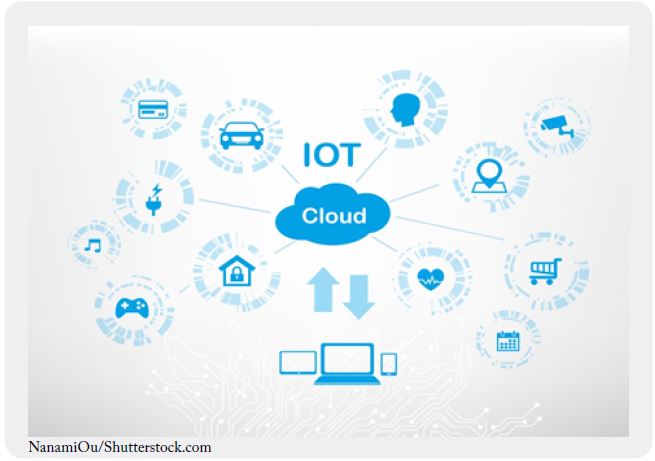

The Internet of Things (IOT) is the collective term for the numerous physical devices that incorporate AI features and can be classified as Intelligent Electronic Devices (IEDs). Figure 3 illustrates the extensive range of such devices which include domestic appliances, autonomous vehicles, sensors and switches etc., all of which have inbuilt network connectivity. The term IED was originally coined by the electrical power industry and referred to microprocessor-based components with the ability to communicate with each other and support the mutual exchange of data. Ordinarily AI devices on the IOT work independently, but for specific tasks each can connect with other elements of the IOT. For example, a smart phone or an AI personal assistant can be used to communicate with other components of the IOT from any location worldwide and issue instructions for the performance of a particular task. It was by means of the IOT that machines launched their attack on the human race in the Terminator series of films.

General intelligence is concerned with teaching machines how to learn rather than making teaching a task-oriented exercise. Unsupervised learning, by which is meant intelligence that can be applied to any task without instruction, is thought to be an important contributory factor to the development of general intelligence. During unsupervised learning a DL neural network is granted unlimited access to data which can be without pattern (unstructured) and without attached meaning (unlabeled). AI will use DL to identify and classify patterns in the input. These learned patterns then form the basis for decision making [9].
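To make the idea of finding patterns in unlabeled data concrete, the following sketch groups a list of numbers into two clusters without being told what the groups mean. It uses a simple two-cluster k-means procedure as a stand-in for the far more elaborate pattern discovery of a DL network; the function name `two_means` and the heart-rate-like readings are invented for illustration.

```python
def two_means(values, iterations=10):
    """Split unlabeled numbers into a low and a high group (1-D k-means, k=2)."""
    low, high = min(values), max(values)  # initial guesses for the two centers
    group_low, group_high = list(values), []
    for _ in range(iterations):
        # Assign every value to whichever center it is closer to.
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not group_high:  # all values identical: nothing to separate
            break
        # Move each center to the mean of the values assigned to it.
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return low, high, group_low, group_high


# Unlabeled readings (invented): no indication of which are 'resting' or 'active'.
readings = [58, 95, 60, 98, 62, 102, 61, 99]
center_low, center_high, cluster_low, cluster_high = two_means(readings)
```

The procedure is never told that two kinds of reading exist; the separation into a group near 60 and a group near 98 emerges from the data alone, and the resulting groups could then be used as the basis for a decision.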

Within the context of machine general intelligence, an AI-based collaboration between the AI Engineering service provider FaktionXYZ [10] and the advertising agency DBB [11] has developed a prototype application called Pearl.ai [12]. The objective of this collaboration is to develop a trustworthy and impartial AI program which can teach itself to identify the ingredients of what humans recognize as creativity, with the ability to improve its learning as it is granted access to more and more relevant data. Although developed initially for use in assessing the best and most successful advertising campaigns worldwide [12], the ultimate objective of this initiative is to expand the tool for wider use. Put simply, the underlying objective of Pearl.ai is to learn to think like a human and to recognize creative human thinking in whatever is its chosen specialism. One of the many challenges to be overcome is the definition of what humans regard as being creative, and what are considered to be the elements of creativity. One essential ingredient is the ability to think “out-of-the-box”, by which is meant the ability to achieve a task by combining apparently disconnected information from entirely different disciplines.

Most applications of AI are task oriented, by which is meant that the system is trained to perform a particular task using supervised DL. Supervised DL is associated with structured and labeled input and output. An autonomous driving program is a typical example of a supervised DL application in which the AI system is fed with labeled video and photographic evidence together with numerical input drawn from its environs.

Input data to an AI system are typically audio, visual, textual or numerical or a mixture of these while the output is some classification of the input. The amount of data required to train an AI system using DL can be huge principally because of the difficulty in refining networks with large numbers of weights. For example, many millions of labeled images and thousands of hours of video footage are needed to train an autonomous driving system [13].

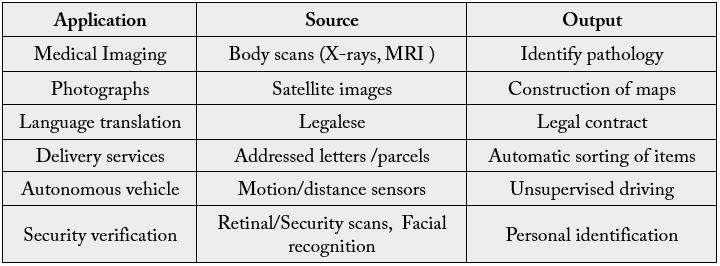

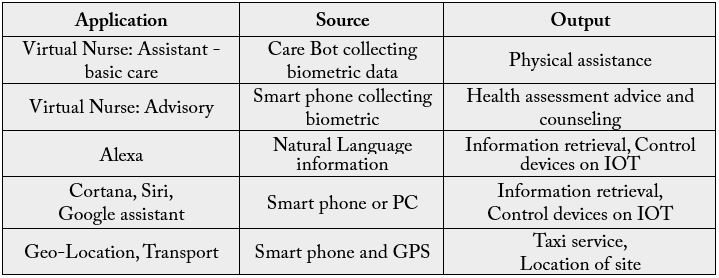

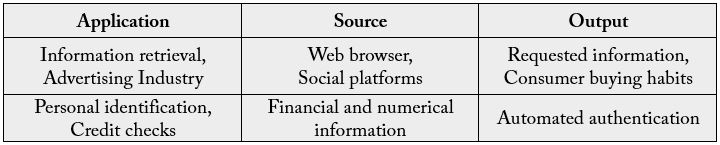

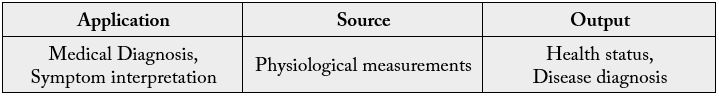

The following list summarizes the functional domains of common AI devices used in healthcare and other industries.

An AI system trained to read text would receive input reflecting many representations of letters of the alphabet and their styles of connectivity, e.g. written text, together with labeled output. The supervised training sensitizes the weights of the underlying ANN to the different data representations inherent in individual characters leading to accurate output when presented with unseen text. An AI system of this type could underlie language translation, automatic sorting of addressed material in a postal or delivery system among other useful applications.

Natural Language Processing (NLP) is an application of AI in which machines try to understand natural written and spoken narrative. The objective of NLP is to allow machines to converse with humans using everyday language which a human would regard as having meaningful content. NLP is central to applications such as Cortana [14], Siri [15], Alexa [16] and Google’s AI assistant [17], all of which aim to obtain requested information for a human user on voice command, and transmit it to that user in an intelligible form. A less obvious but very important use of NLP occurs in the healthcare industry, where dialogue between patient and healthcare professional is a central component of its operational effectiveness. This issue will be addressed in detail in a later section.

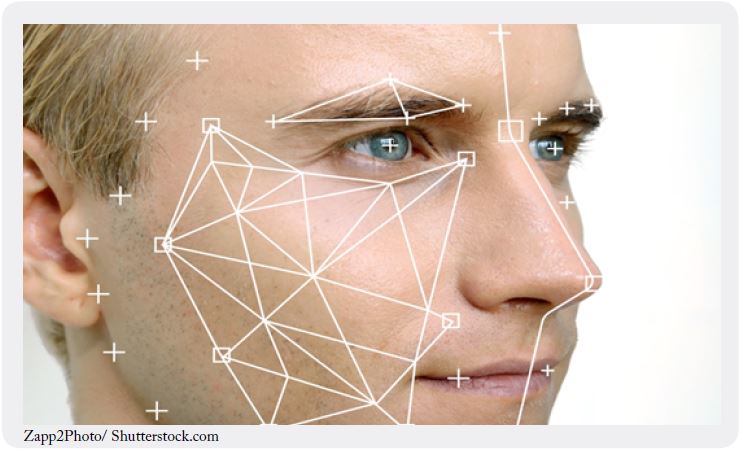

AI systems using computer vision to control autonomous vehicles are now in the mainstream of public and commercial interest. Other important applications include facial recognition and image recognition. Facial recognition is already used for the confirmation of identity at self-service border crossings and is currently under development as a reliable indicator of identity, consigning to history, among other things, the need for PIN numbers as security identifiers. Figure 4 illustrates that the input to an AI system configured for facial recognition consists of the three-dimensional geometrical characteristics of the face. AI then analyses the data for deep-rooted patterns analogous to a fingerprint. These patterns are then compared against a previously verified set of patterns for that individual held in a secure database.
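The final comparison step can be sketched as a distance check between a newly extracted pattern and the patterns already verified for that individual. This is an assumption-laden illustration, not the method of any deployed system: representing a facial pattern as a short list of numbers, the Euclidean distance measure, the `tolerance` value and the function name `verify` are all hypothetical.

```python
import math


def verify(candidate, enrolled_patterns, tolerance=0.5):
    """Accept the candidate if it lies close to any previously verified pattern."""
    return any(math.dist(candidate, pattern) <= tolerance
               for pattern in enrolled_patterns)


# Previously verified patterns for one person (invented numbers).
enrolled = [[0.10, 0.90, 0.30], [0.12, 0.88, 0.31]]

same_person = verify([0.11, 0.90, 0.30], enrolled)   # very close to enrolled data
other_person = verify([0.90, 0.10, 0.70], enrolled)  # far from enrolled data
```

In practice the tolerance governs the trade-off between wrongly rejecting the genuine individual and wrongly accepting someone else, so it would need to be set from measured error rates rather than chosen by hand as here.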

Automated image recognition will revolutionize the world of medical imaging being less costly than employing humans to do the job and also more effective in terms of its speed and ability to identify potential abnormalities: it never gets tired and can operate 24/7.

Many devices are now available on the market to monitor a range of physiological measures related to health status. The devices or transducers provide an interface between biological, motor and sensory functions to convert biologically-derived data into electronic signals. These are commonly constructed from nanostructures into electrodes, chips, biosensors or bio-computing devices allowing electronic systems to interact with biological systems. Established areas of monitoring such as vital signs (BT, BP, HR, RR) are measured using sensors worn by an individual. Physical activity, nutritional intake, sleep and many more human activities can be directly or indirectly captured electronically. These data can be utilized through trained AI devices.

A summary of the interfaces of text, voice, vision and biometrics, and their utilization in AI, is represented in many common applications.

[Figure labels: Structured Visual Input; Voice; Text; Biophysical]

Uses of AI Facilitated Machines in the Healthcare Industry

Healthcare is arguably the industry for which AI will potentially have the largest long-term implications because of its traditionally heavy reliance on human labour in the medical, caring and administrative sectors. It is anticipated that many repetitive tasks currently undertaken by humans will be delegated to AI systems in the future. The Harvard Business Review [18] estimates the total potential annual value of the ten most promising applications of AI in the healthcare industry at $158B by 2026, of which approximately half will come from robot-assisted surgery ($40B), virtual nursing assistants ($20B) and administration ($18B). Prior to reviewing individual opportunities for AI in the major sectors of the healthcare industry, we note that IBM Watson has the capability of contributing to all sectors of industry, and to the healthcare industry in particular.

IBM Watson [19] is a supercomputer combining the processing power of 90 servers hosted on ‘the Cloud’ and using IBM’s DeepQA artificial intelligence software to answer questions directed to it. DeepQA uses natural language processing and DL to access and retrieve information from a storage repository exceeding 200M pages of information [20]. Watson operates on unstructured data and so is unlimited in the nature of the task to which it can be put. In the context of healthcare Watson enables care givers and medical professionals to assess Big Data via the Cloud. Uses include analyses of medical literature and access to clinical data from a database of electronic medical records, among others, with the overarching objective of providing care based on best available evidence.

For example, AI technology successfully identified two possible drugs to be used in the treatment of Ebola from information related to the virus, its impact on humans and treatments in previous scientific investigations that might prove effective [21]. Applications of AI in cancer research can analyze data in clinical notes for possible treatment pathways. AI has also been developed for personal use in seeking a second opinion on medical diagnosis and treatments [22]. AI, however, does rely on existing criteria for judging the scientific value of its information sources, such as the impact factor or number of citations of journals, which may not be the best or most appropriate/trustworthy ‘gold standard’ in the context of clinical care. What such criteria favor may not always be what is best in the case of an individual patient, and the clinician’s expertise is required to interpret and judge what is best on a case-by-case basis.

The organization of healthcare services is recognized to be hugely complex, with different perspectives depending on one’s personal or organizational relationship to it.

From the healthcare practitioner and consumer perspectives, some of the key functions being advanced through the use of AI are in the following areas. A macro-scale synchronization of services can be achieved: staffing complement across specialties, patient appointments, staff rosters, support services, laboratory services, imaging etc. can all be ‘intelligently’ managed using AI-supported technology and linked to the individual elective appointment systems for consultations, investigations and treatments. Scheduling of appointments can also take account of the many characteristics known about users of healthcare services (through intelligence on previous users), e.g. patients who are known to miss appointments, dates/times associated with poor attendance and specialty biases. Therefore, a wide range of patterns of utilization can be identified and interventions introduced to improve on low performance areas.
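A very simple version of identifying dates/times associated with poor attendance can be sketched as a tally of missed appointments per time slot. An AI-supported scheduler would draw on far richer patient and service data; the record format, the slot labels and the function name `no_show_rates` below are assumptions made purely for illustration.

```python
from collections import defaultdict


def no_show_rates(records):
    """Return the fraction of missed appointments for each time slot.

    Each record is a (slot, attended) pair, e.g. ("Fri PM", False).
    """
    totals = defaultdict(int)
    missed = defaultdict(int)
    for slot, attended in records:
        totals[slot] += 1
        if not attended:
            missed[slot] += 1
    return {slot: missed[slot] / totals[slot] for slot in totals}


# Invented attendance history for two appointment slots.
history = [
    ("Mon AM", True), ("Mon AM", False), ("Mon AM", True),
    ("Fri PM", False), ("Fri PM", False), ("Fri PM", True),
]
rates = no_show_rates(history)
worst_slot = max(rates, key=rates.get)  # slot with the poorest attendance
```

Slots with a high missed-appointment rate are candidates for interventions such as reminders or rescheduling, which is the kind of low-performance area the text refers to.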

At the administrative/clinical interface in hospital settings, AI can manage the scheduling of nursing rotas, the assignment of patients to rooms, the management of patient appointments, act as a triage nurse, take decisions concerning patient admissions, manage financial matters such as patient billing and the reordering of medical consumables etc.

The creation of an electronic health record (www.healthit.gov) provides a digital version of a person’s health record, demographics, health medical interactions, consultations, interventions and ongoing real-time medical care. AI will allow an individual’s medical records to be available to authorized users securely at different points in the healthcare system. It should allow a seamless flow of information within a functioning digital organizational infrastructure allowing improvements in coordination, completeness of care records, accessibility and availability to name only a few of the advantages in comparison to the traditional paper medical records and charts systems. AI can be linked to population data, evidence-based treatment guidelines and can promote patient engagement in their healthcare management through health-related lifestyle activity portals linked to medical records.

These data are also a rich source of information to train AI in the ‘health of the population’ as there will be access to dynamic health data sources. However, many issues relating to its usage and trustworthiness need to be examined alongside issues of privacy, individual autonomy, justice and equity to name only a few of the challenging perspectives on use of healthcare data.

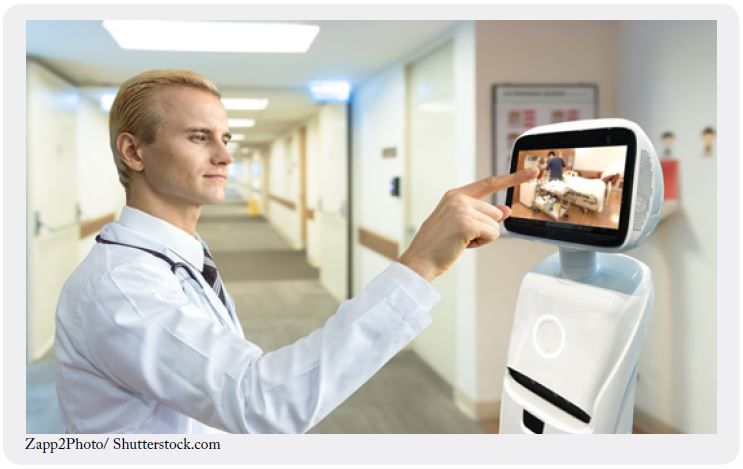

The healthcare assistant type of application includes Healthcare Bots that work with healthcare staff to perform routine non-complex nursing duties such as position changes and assisting with the physical environment and with meals and fluids, as illustrated in Figure 5. These Bots can also operate autonomously and are designed to provide services such as helping patients with infirmities to stand up, go to the toilet and take a bath etc. Robear [23] is an example of a robotic assistant with these capabilities.

Other types of application, often called ‘Virtual Nurse’ assistants, can communicate with patients using natural language and respond in line with a word or phrase cue. These assistants are designed to converse with patients in a way that is responsive to the patient’s physical and emotional wellbeing. In the course of conversation their role is often to look for early signs of age-related problems such as dementia while simultaneously collecting and recording the patient’s current state of wellbeing and clinical data as appropriate.

Such ‘intelligent’ devices can also be programmed with the ability to scan communal patient areas and identify behaviors or activities of concern, intervening with messages or feedback, e.g. the time of forthcoming events, or calling for assistance as they deem necessary. They have the capacity to distinguish among different behaviors and to select different actions depending on the cues.

In addition to physical, human-like robotic devices there are also assistant services performed via telephone applications. One leading development in this area is Molly, a Virtual Nursing assistant developed by Sensely [24,25]. Molly integrates emotion recognition and analytical tools with an algorithmic component containing rules for diagnosing symptoms and dealing with patients with chronic illnesses such as heart disease, diabetes and other age-related problems.

In AI device classification Molly is known as an ‘Avatar’, by which is meant a software representation of a human. It acts as a ‘communication centre’ between a patient, information and a range of services, with the additional capacity to interact through voice and provide the basics of social/therapeutic interaction. For example, Molly will call patients on their smart-phone as per an agreed schedule, enquire about how they are feeling and is programmed to respond to a patient’s mood and emotional state in addition to their symptoms. Clearly this would be suitable only for patients with the capacity to manage telephone calls. Molly will alert healthcare providers when she decides that a patient may need health counseling or exhibits anxiety about side effects of prescribed medications or lifestyle changes etc. Information collected during each brief conversation is incorporated automatically into a patient’s medical record together with data from various medical devices and internet-connected hardware used by that patient in the course of everyday living.

Molly allows medical practitioners to monitor a patient’s health and wellbeing, thereby reducing or preventing hospitalization events. Molly eliminates the time-consuming process of recording patient data, never becomes fatigued and so can manage more patients than a human nurse while simultaneously controlling the cost of quality healthcare and lessening the impact of the shortage of skilled nursing staff in an aging population.

Many similar types of devices that can provide health/social care for people are evolving as enablers and support devices for independent living. These applications are likely to find greatest impact in the community healthcare setting which is continuing to grow as the largest sector of healthcare delivery [26].

This area of hospital care is using the most advanced forms of technology in its monitoring and management of patient care. Case records, summaries of real-time physiological monitoring and reports from multiple disciplines such as biochemistry, pathology, radiology, nursing and AHP, medicine etc. are collated electronically into synchronized documentation in many facilities. AI devices monitoring patients can use these multiple sources of data to detect patterns of changing health status and early warning indicators of many types of impending health problems, with the ability to discriminate between important and non-important changes.
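One simple way to separate important from non-important changes is to compare a new reading with the patient’s own recent baseline and flag it only when it departs by more than the usual variation. The sketch below is a statistical stand-in for what a trained AI monitor would do with many data sources at once; the function name `flag_change`, the `z_limit` of 3 and the heart-rate values are illustrative assumptions with no clinical standing.

```python
from statistics import mean, stdev


def flag_change(history, latest, z_limit=3.0):
    """Flag a reading that deviates strongly from the patient's own baseline."""
    baseline = mean(history)
    spread = stdev(history)  # requires at least two past readings
    if spread == 0:
        return latest != baseline
    return abs(latest - baseline) / spread > z_limit


# Invented recent heart-rate readings (beats per minute) for one patient.
recent_hr = [72, 74, 71, 73, 75, 72, 74]

minor = flag_change(recent_hr, 74)  # within normal variation for this patient
major = flag_change(recent_hr, 95)  # far outside the recent baseline
```

Because the baseline is computed per patient, the same absolute reading may be unremarkable for one individual and an early warning indicator for another.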

Robotic-assisted surgery typically consists of robotically controlled arms to which are attached various surgical instruments and a high definition camera providing a three-dimensional view of the surgical site [27, 28, 29]. Figure 6 illustrates two surgeons working together to control surgical robots from nearby computer consoles. An important strength of robotic surgery is the fact that the patient and the surgeon can be at different locations, as in telemedicine facilities, allowing surgical procedures to be done remotely. The primary advantage of robotic surgery is the level of precision it offers the surgeon when performing complex or delicate procedures, some of which would otherwise have been difficult or well-nigh impossible using conventional methods. Robotic surgery is also often minimally invasive, resulting in reduced risks of infection and complications, faster recovery times and less scarring than traditional procedures. The integration of AI into direct surgical applications is mostly at the research stage, with developments in skin suturing being closest to becoming a practical reality. Other areas being examined are in surgical workflow, covering the complexities of staff and procedure scheduling together with pre- and post-operative preparation and monitoring. Further enhancements in telemedicine are also predicted.

It is anticipated that AI systems based on deep learning will in the future systematically replace the bulk of human expertise in scanning medical images such as MRI and CT scans, ultrasound and X-ray images for the identification of abnormalities. AI systems will allow faster diagnostics, reducing the time patients wait for a diagnosis from weeks to mere hours and thereby accelerating the introduction of treatment options. Computers do not get tired, and with their systematic exposure to more and more images, they can be expected to deliver a level of reliability exceeding that of a trained human operator.

Healthcare spending in the US is approximately $3.5T or approximately 18% of GDP [30]. Harvard Business Review [18] estimates the potential annual value of AI in the detection of fraud at approximately $17B by 2026. While this sum might seem a surprisingly large investment for a non-healthcare related service, the FBI estimates that the current loss of healthcare spending attributable to fraud and abuse of federal wide assurance ranges from $90B to $300B! [30].

Errors in prescribing are frequently correlated with errors in diagnosis and can result in significant costs due in large part to financial penalties. Prescriptions are normally issued to patients on the basis of several questions from the physician accompanied by a medical examination which is often brief due to pressures of caseload.

AI assistants or Chat Bots can relieve some of this pressure by dealing with routine casework. These robots can converse with patients in natural language, compile a picture of a patient’s state of health and provide them with a personalized program of healthcare. Chat Bots have immediate access to an encyclopedia of medical knowledge, and should be less prone to misdiagnosing symptoms, thereby reducing the likelihood of medical errors while simultaneously enabling the physician to devote more time to patients with serious ailments. An AI assistant has access to a patient’s medical history, which it analyzes during the course of conversation, looking to identify potentially serious illnesses which can be treated effectively or prevented if discovered in their early stages of development. The diagnostic performance of AI assistants can rival or surpass that of humans in the early diagnosis of skin cancer and eye conditions threatening severe loss of sight, among other illnesses.

The use of Chat Bots is not confined to the healthcare industry. Chat Bot interfaces supply customer services across a range of commercial, banking and entertainment industries, and are often deployed as the first line of the customer-business interface.

A critical concern in the use of AI systems in general, and healthcare in particular, is the trustworthiness

of their abilities to contribute to excellence in the quality of patient care. An AI application could be

untrustworthy because the algorithm central to its function is flawed, either through a design error or through deliberate manipulation, as

happened with the Volkswagen emissions scandal [31], or because the training data themselves are untrustworthy.

The performance of an AI system using DL will improve by granting it access to increased volumes of data

(section 2.4). Some of this data may be generated by other AI applications operating in the same sector of

healthcare. Consequently, feedback is a serious concern.

Put simply, the optimal pathway for AI to improve its performance is to slant outcomes in favor of previously predicted outcomes, many of which could have been generated by similar AI systems; that is, as time progresses there is a danger that the data will increasingly supplant the healthcare professional. A number of other issues concerning the use of AI in healthcare are listed below in no particular order.

• Central to the effectiveness of AI in healthcare is the alignment of expectations for each participant with

reality.

• Is it desirable to have machines advising patients, particularly if patients get the impression that the

machine has not fully understood the gist of their conversations leaving them frustrated and annoyed?

• Patient privacy is a concern because the machine is granted access to personal information and health

records. What safeguards must be put in place to satisfy legal requirements and to ensure that data are secure

and that access is restricted?

• Accountability is a concern that affects all AI applications, and in particular AI applications managing

critical services because a machine itself cannot be held accountable for its recommendations/advice.

Machines could make decisions based on collective unchecked/unverified data. Clarity on endorsement of AI

output information, clinical diagnoses and proposed management is required.

• Who monitors the performance of machines when they have been devolved to operate remotely: another

machine? Experience warns that AI applications can have unexpected and unintended consequences.

• In reality the machine acts as a “third party” in the patient-doctor relationship and can challenge the

dynamics of responsibility; who should the patient believe in the face of conflicting advice/diagnosis? Will

this increase patient anxiety, will the doctor be equipped or have the time to respond and explain additional

inputs from the AI device? Will this detract from the care of the wider population of people with healthcare

needs?

• Stewardship to ensure transparency in design, training (in particular the data sources used), deployment and

evaluation should be a central feature of the development and implementation processes. Furthermore, issues

of accountability in decision-making and understanding of the reasoning processes should be made explicit.

Systematic, relevant to purpose, quality evaluation metrics should be in place for co-aligned monitoring of

effectiveness of real-time performance of the AI powered devices.

Challenges of AI

This article has provided an overview of the concepts and creation of AI, highlighting its operating strengths and weaknesses

as each topic area was reviewed. Because AI learns from input data (both during set up and in its

use in practice), the primary limitation of AI technology necessarily lies in the quality and reliability

of this data. What you put in is what you get out. Untrustworthy input, by which is meant input that is

not representative of the task to be achieved, will generate untrustworthy output. For example, training

an autonomous driving application only on trunk roads provides untrustworthy learning because the input is

unrepresentative of actual usage and real driving conditions.
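The point can be made concrete with a deliberately simple sketch. It assumes a hypothetical one-parameter model fitted by least squares to entirely synthetic data from one setting (labelled "trunk road") and then evaluated in a different setting (labelled "urban") where the underlying relationship differs. The numbers, names and relationships are invented for illustration and do not describe any real driving system.

```python
# Illustrative sketch with synthetic data; nothing here models a real vehicle.

def fit_slope(xs, ys):
    """Least-squares fit of y = a * x through the origin; returns a."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def mean_abs_error(slope, xs, ys):
    """Average absolute difference between predictions and true values."""
    return sum(abs(slope * x - y) for x, y in zip(xs, ys)) / len(xs)

# Training setting (hypothetical "trunk road"): the response is y = 2 * x.
trunk_x = [50, 60, 70]
trunk_y = [100, 120, 140]

# Setting met in real use (hypothetical "urban"): the response is y = 3 * x.
urban_x = [10, 20, 30]
urban_y = [30, 60, 90]

slope = fit_slope(trunk_x, trunk_y)                      # learned only from trunk-road data
error_on_training_setting = mean_abs_error(slope, trunk_x, trunk_y)
error_on_unseen_setting = mean_abs_error(slope, urban_x, urban_y)

print("error where trained:", error_on_training_setting)
print("error where deployed:", error_on_unseen_setting)
```

The model fits its training setting perfectly yet is systematically wrong in the setting it was never shown, which is the sense in which unrepresentative input yields untrustworthy output.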

AI in its current form has no self-awareness or consciousness, nor can it assess the risk implicit in its actions. Society must be concerned if AI applications take over control of critical services from humans. For example, AI systems trading stocks created a sudden unpredicted crash during which several important stock market indices lost and recovered about 10% of their value in little over half an hour’s trading [32]. The incident is a reminder that unanticipated events can occur with AI applications as in human managed systems, but the uncertainty over how an AI application will react in unforeseen circumstances is a serious weakness.

AI technologies currently have long lead times from concept to practice, are expensive to develop, require massive computing resources and consume significant amounts of energy and human capital. The expertise available is much less than is needed, but it is developing as many educational institutions initiate programs to rapidly build provision in this field. This expansion of employment might offset to some degree the predicted job losses in those areas most affected by AI operations. Another serious challenge will be the maintenance of AI systems. The past and present are littered with IT failures, for example, the TSB [33] upgrade fiasco and the chaos resulting from the failure of the Welsh NHS IT provision [34], both occurring in very recent times.

Dickson writing in Artificial Intelligence [35] describes a number of challenges facing the AI industry.

These can be classified loosely as ethical, practical or legalistic. AI must deliver significant net benefits to

society by way of compensation for lost employment which raises the important questions of how to replace

jobs lost through automation and how to distribute the benefits of AI to society in a fair and equitable way.

Practical challenges concern ensuring the trustworthiness of input data fed to AI systems, how to improve

the effectiveness of the learning process and reduce learning times. Legal issues arise through assigning

responsibility for failures of AI technology. Human blame is usually relatively easy to apportion, for example,

in a traffic accident but is less clear for autonomous vehicles.

Treffiletti [36] believes that AI in its current form is rudimentary. There is a significant risk that expectations will outstrip what current implementations of AI can reasonably achieve. Allied with unrealistic expectations is the overhyping of what AI can actually do. Commercial concerns may feel compelled to embrace AI in their business, but for many the outcome will be automation by another name.

Marr [37] points out that the computing power available to AI cannot keep pace with the annual growth in the volume of input data, and this mismatch is likely to persist until new computing paradigms like quantum computing become a practical reality. There is also a serious shortage of human capital with the ‘knowhow’ and skills to fully develop the power of AI. Marr also points out that in practice AI behaves with the obscurity of a ‘blackbox’. The causal connection between input and output is opaque, but for society to trust the technology its solutions must be seen to be effective and trustworthy.

Although AI has made remarkable progress from its inception in applications involving visualization, natural speech and the ability to play difficult, but essentially algorithmic, games, the ambition of creating machines with artificial general intelligence that can think like humans with human creativity seems as far away as ever. Indeed, recent research in AI has largely focused on extending and improving task oriented applications [38]. By contrast, humans can think out-of-the-box, by which is meant a discontinuous pattern of thinking in the sense of deploying information learned elsewhere and apparently unconnected with the performance of the task at hand.

Pearl and Mackenzie [39] propose a new paradigm for research in the quest for artificial general intelligence based on the notion of “causal reasoning”, namely the ability to understand “the whys and hows of a situation”. They make the point that the efficacy of Deep Learning as currently configured is based on correlation and association, which are often wrongly regarded as metaphors for causality [40]. Pearl and Mackenzie illustrate their point with reference to an AI application fed with the times of sunrise and a cockerel’s crowing. The cockerel always crowed before sunrise and so the AI application concluded that it was the cockerel’s crowing that caused the sun to rise.
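The cockerel example can be sketched in a few lines. The code below is an illustration under stated assumptions, not Pearl and Mackenzie's method: the times are synthetic, the function names are hypothetical, and the "intervention" is simply a rerun of the same toy world with the cockerel removed.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def observe_day(day, cockerel_present=True):
    """Toy world: returns (crow_minute, sunrise_minute) after midnight.

    Sunrise depends only on the day (synthetic: 2 minutes earlier each day).
    The cockerel, if present, crows 15 minutes before sunrise.
    """
    sunrise = 360 - 2 * day
    crow = sunrise - 15 if cockerel_present else None
    return crow, sunrise

days = range(5)

# Passive observation: crowing and sunrise move together perfectly.
observations = [observe_day(d) for d in days]
crow_times = [crow for crow, _ in observations]
sunrise_times = [sunrise for _, sunrise in observations]
correlation = pearson(crow_times, sunrise_times)

# Intervention: remove the cockerel and record sunrise again.
sunrise_without_cockerel = [observe_day(d, cockerel_present=False)[1] for d in days]

print("correlation between crowing and sunrise:", round(correlation, 6))
print("sunrise unchanged without cockerel:", sunrise_times == sunrise_without_cockerel)
```

A system that only sees the paired observations finds a near-perfect association and has no basis for telling which event drives the other. Only the intervention, which asks what happens when the cockerel is silenced, reveals that sunrise does not depend on the crowing.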

Conclusions

This article has compared the structure of artificial and biological neural networks, and explained in overview

how Deep Learning seeks to simulate the biological learning process in human development, namely that

we learn by practice. Important applications of artificial intelligence in the world at large, with particular reference to the healthcare industry, have been reviewed. Training and education across all levels

of engagement with this technology is required. In addition, individuals (healthcare practitioners, patients,

carers, general public) interacting with autonomous machines will require technical and scientific support to

engage meaningfully with a clear understanding of the strengths and limitations in their scope.

The drive to introduce AI technology at all levels of society will have increasingly important implications for the employment of persons doing valuable, but nevertheless routine, work. How society should redeploy such persons and/or distribute in an equitable way the benefits to society from the ubiquitous expansion of AI remains an important, but open, question. AI in healthcare promises large scale efficiency savings across the entire sector, both financial and in the use of human capital, while simultaneously maintaining a high quality of service for patients. However, as with the introduction of all new processes and procedures, it is only with time that unpredictable and unintended consequences of the new technology will begin to surface.

While AI applications will continue to invade every corner of human living, there is a growing doubt in the AI community that current paradigms for learning can capture the essence of human thinking and creativity. The monster image of AI as portrayed in the sequence of Terminator films is as distant as ever, and arguably society should be grateful for this lack of progress. Perhaps AI is hitting the boundary experienced by other scientific endeavors, namely that it is concept that leads technology and not the reverse. What is needed is a better understanding of the physical processes in human development that are responsible for intangible concepts such as consciousness and cognitive thinking.

Bibliography
